Despite Elon Musk’s repeated claims that cracking down on child sexual abuse material (CSAM) on Twitter is “priority #1,” evidence continues to show that CSAM persists on Twitter.

According to a new report from The New York Times in conjunction with the Canadian Centre for Child Protection, not only is it easy to find CSAM on Twitter, but Twitter also promotes some of the images through its recommendation algorithm.

The Times worked with the Canadian Centre for Child Protection to help match abusive images to the centre’s CSAM database. The publication uncovered content on Twitter that had previously been flagged as exploitative. It also found accounts offering to sell more CSAM.

During the search, the Times said it found images of ten child abuse victims in 150 instances “across multiple accounts.” The Canadian Centre for Child Protection, for its part, ran a scan against the most explicit videos in its database and found over 260 hits, which together had more than 174,000 likes and 63,000 retweets.

“The volume we’re able to find with a minimal amount of effort is quite significant. It shouldn’t be the job of external people to find this sort of content sitting on their system,” Lloyd Richardson, technology director at the Canadian Centre for Child Protection, told the Times.

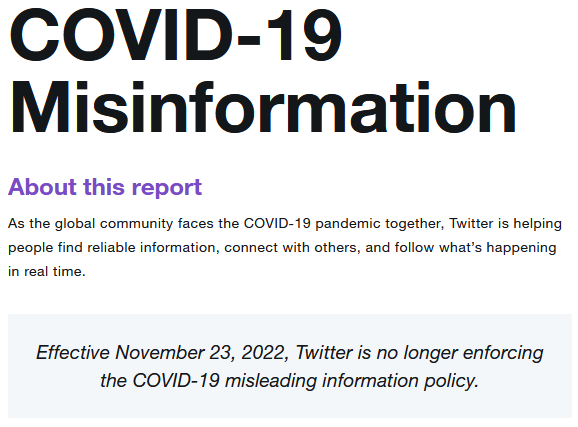

Meanwhile, Twitter laid off a significant number of its employees and contract workers in November 2022, including 15 percent of its trust and safety team — which handles content moderation. At the time, Twitter claimed the changes wouldn’t impact its moderation.

Later that same month, Musk granted a “general amnesty” to banned Twitter accounts, allowing some 62,000 accounts to return to the platform (which included white supremacist accounts). At the same time, reporting revealed that Twitter’s CSAM removal team was decimated in the layoffs, leaving just one member for the entire Asia Pacific region.

In December 2022, Twitter abruptly disbanded its Trust and Safety Council after some members resigned. Musk accused the council of “refusing to take action on child exploitation” even though it was an advisory council that had no decision-making power. Former Twitter CEO Jack Dorsey chimed in to say that Musk’s claim was false, but Musk only doubled down on claims that child safety was a “top priority.”

In February, Twitter said that it was limiting the reach of CSAM content and working to “suspend the bad actor(s) involved.” The company then claimed that it suspended over 400,000 accounts “that created, distributed, or engaged with this content,” which the company says is a 112 percent increase since November.

Despite this, the Times reported that data from the U.S. National Center for Missing and Exploited Children shows Twitter made only about 8,000 reports monthly. Tech companies are legally required to report users even if they only claim to sell or solicit CSAM.

You can read the Times report in full here.

Source: The New York Times Via: The Verge